Whether you think artificial intelligence will save the world or end it, you have Geoffrey Hinton to thank. Hinton has been called “the Godfather of AI,” a British computer scientist whose controversial ideas helped make advanced artificial intelligence possible and, so, changed the world. As we first reported last year, Hinton believes that AI will do enormous good but, tonight, he has a warning. He says that AI systems may be more intelligent than we know and there’s a chance the machines could take over. Which made us ask the question:

Scott Pelley: Does humanity know what it’s doing?

Geoffrey Hinton: No. I think we’re moving into a period when for the first time ever we may have things more intelligent than us.

Scott Pelley: You believe they can understand?

Geoffrey Hinton: Yes.

Scott Pelley: You believe they are intelligent?

Geoffrey Hinton: Yes.

Scott Pelley: You believe these systems have experiences of their own and can make decisions based on those experiences?

Geoffrey Hinton: In the same sense as people do, yes.

Scott Pelley: Are they conscious?

Geoffrey Hinton: I think they probably don’t have much self-awareness at present. So, in that sense, I don’t think they’re conscious.

Scott Pelley: Will they have self-awareness, consciousness?

Geoffrey Hinton: Oh, yes.

Scott Pelley: Yes?

Geoffrey Hinton: Oh, yes. I think they will, in time.

Scott Pelley: And so human beings will be the second most intelligent beings on the planet?

Geoffrey Hinton: Yeah.

60 Minutes

Geoffrey Hinton told us the artificial intelligence he set in motion was an accident born of a failure. In the 1970s, at the University of Edinburgh, he dreamed of simulating a neural network on a computer, simply as a tool for what he was really studying: the human brain. But, back then, almost no one thought software could mimic the brain. His Ph.D. advisor told him to drop it before it ruined his career. Hinton says he failed to figure out the human mind. But the long pursuit led to an artificial version.

Geoffrey Hinton: It took much, much longer than I expected. It took, like, 50 years before it worked well, but in the end it did work well.

Scott Pelley: At what point did you realize that you were right about neural networks and most everyone else was wrong?

Geoffrey Hinton: I always thought I was right.

In 2019, Hinton and his collaborators, Yann LeCun, on the left, and Yoshua Bengio, won the Turing Award, the Nobel Prize of computing. To understand how their work on artificial neural networks helped machines learn to learn, let us take you to a game.

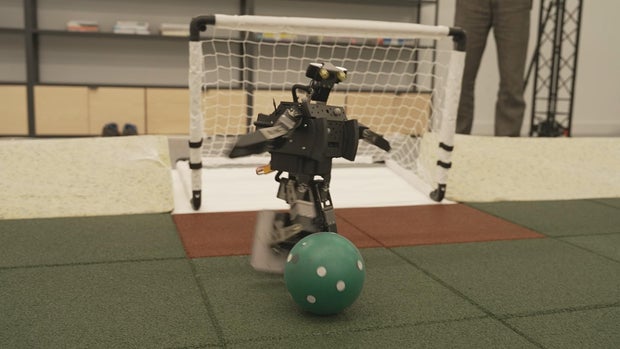

This is Google’s AI lab in London, which we first showed you last year. Geoffrey Hinton was not involved in this soccer project, but these robots are a great example of machine learning. The thing to know is that the robots were not programmed to play soccer. They were told to score. They had to learn how on their own.

In general, here’s how AI does it. Hinton and his collaborators created software in layers, with each layer handling part of the problem. That’s the so-called neural network. But this is the key: when, for example, the robot scores, a message is sent back down through all of the layers that says, “that pathway was right.”

Likewise, when an answer is wrong, that message goes down through the network. So, correct connections get stronger. Wrong connections get weaker. And by trial and error, the machine teaches itself.
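The process described above, where an error signal travels back down through the layers and each connection is strengthened or weakened, is what researchers call backpropagation. A minimal sketch in plain Python may make it concrete. It is an illustration under our own assumptions, not Hinton’s code or Google’s soccer system: `TinyNet` is a hypothetical two-layer network, and the task (learning logical OR), the sigmoid units, the squared-error measure, and the learning rate are all choices made only for this example.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


class TinyNet:
    """Hypothetical toy network: 2 inputs -> `hidden` units -> 1 output."""

    def __init__(self, hidden: int = 3, seed: int = 0) -> None:
        rng = random.Random(seed)
        # w1[j][i]: strength of the connection from input i to hidden unit j.
        self.w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        # w2[j]: strength of the connection from hidden unit j to the output.
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x):
        """Pass an input up through both layers; return hidden activations and output."""
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def train_step(self, x, target, lr=0.5):
        """One round of trial and error on a single example."""
        h, y = self.forward(x)
        # Error signal at the output: squared-error gradient through the sigmoid.
        delta_out = (y - target) * y * (1 - y)
        # Send the error back down: each hidden unit's share depends on its outgoing weight.
        delta_hidden = [delta_out * w * hj * (1 - hj) for w, hj in zip(self.w2, h)]
        # Nudge every weight against its gradient, strengthening connections that
        # push toward the target and weakening those that push away from it.
        for j, hj in enumerate(h):
            self.w2[j] -= lr * delta_out * hj
        self.b2 -= lr * delta_out
        for j, dj in enumerate(delta_hidden):
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * dj * xi
            self.b1[j] -= lr * dj


def mean_loss(net, data):
    """Average squared error over a dataset (lower is better)."""
    return sum(0.5 * (net.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


# Illustrative task chosen for this sketch: learn logical OR from four examples.
DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

net = TinyNet(hidden=3, seed=0)
before = mean_loss(net, DATA)
for _ in range(3000):
    for x, t in DATA:
        net.train_step(x, t)
after = mean_loss(net, DATA)
print(f"mean loss before: {before:.4f}, after: {after:.4f}")
print([round(net.forward(x)[1]) for x, _ in DATA])
```

Each call to `train_step` is one round of the trial and error the narration describes; repeated a few thousand times, it should drive the average error toward zero. Large systems rest on the same basic idea, though with vastly more connections and far more elaborate designs.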

Scott Pelley: You think these AI systems are better at learning than the human mind.

Geoffrey Hinton: I think they may be, yes. And at present, they’re quite a lot smaller. So even the biggest chatbots only have about a trillion connections in them. The human brain has about 100 trillion. And yet, in the trillion connections in a chatbot, it knows far more than you do in your hundred trillion connections, which suggests it’s got a much better way of getting knowledge into those connections.

A much better way of getting knowledge, and one that isn’t fully understood.

Geoffrey Hinton: We have a very good idea of sort of roughly what it’s doing. But as soon as it gets really complicated, we don’t actually know what’s going on any more than we know what’s going on in your brain.

Scott Pelley: What do you mean we don’t know exactly how it works? It was designed by people.

Geoffrey Hinton: No, it wasn’t. What we did was we designed the learning algorithm. That’s a bit like designing the principle of evolution. But when this learning algorithm then interacts with data, it produces complicated neural networks that are good at doing things. But we don’t really understand exactly how they do those things.

Scott Pelley: What are the implications of these systems autonomously writing their own computer code and executing their own computer code?

Geoffrey Hinton: That’s a serious worry, right? So, one of the ways in which these systems might escape control is by writing their own computer code to modify themselves. And that’s something we need to seriously worry about.

Scott Pelley: What do you say to someone who might argue, “If the systems become malevolent, just turn them off”?

Geoffrey Hinton: They will be able to manipulate people, right? And these will be very good at convincing people ’cause they’ll have learned from all the novels that were ever written, all the books by Machiavelli, all the political connivances. They’ll know all that stuff. They’ll know how to do it.


‘Know-how’ of the human kind runs in Geoffrey Hinton’s family. His ancestors include mathematician George Boole, who invented the basis of computing, and George Everest, who surveyed India and got that mountain named after him. But, as a boy, Hinton himself could never climb the peak of expectations raised by a domineering father.

Geoffrey Hinton: Every morning when I went to school he’d actually say to me, as I walked down the driveway, “get in there pitching and maybe when you’re twice as old as me you’ll be half as good.”

Dad was an authority on beetles.

Geoffrey Hinton: He knew a lot more about beetles than he knew about people.

Scott Pelley: Did you feel that as a child?

Geoffrey Hinton: A bit, yes. When he died, we went to his study at the university, and the walls were lined with boxes of papers on different kinds of beetle. And just near the door there was a slightly smaller box that simply said, “Not insects,” and that’s where he had all the things about the family.

Today, at 76, Hinton is retired after what he calls 10 happy years at Google. Now, he’s professor emeritus at the University of Toronto. And, he happened to mention, he has more academic citations than his father. Some of his research led to chatbots like Google’s Bard, which we met last year.

Scott Pelley: Confounding, absolutely confounding.

We asked Bard to write a story from six words.

Scott Pelley: For sale. Baby shoes. Never worn.

Scott Pelley: Holy cow! The shoes were a gift from my wife, but we never had a baby…

Bard created a deeply human story of a man whose wife could not conceive and a stranger who accepted the shoes to heal the pain after her miscarriage.

Scott Pelley: I am rarely speechless. I don’t know what to make of this.

Chatbots are said to be language models that just predict the next most likely word based on probability.

Geoffrey Hinton: You’ll hear people saying things like, “They’re just doing auto-complete. They’re just trying to predict the next word. And they’re just using statistics.” Well, it’s true they’re just trying to predict the next word. But if you think about it, to predict the next word you have to understand the sentences. So, the idea they’re just predicting the next word so they’re not intelligent is crazy. You have to be really intelligent to predict the next word really accurately.
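To see what “predicting the next most likely word based on probability” means at its very crudest, here is a hypothetical toy in Python: it counts which word follows which in a few sentences and turns those counts into probabilities. The function names and the tiny corpus are invented for this sketch, and real chatbots such as ChatGPT4 or Bard are vastly larger neural networks, not word counters. Because it looks back only one word, it also shows the limitation Hinton points to: predicting the next word well takes far more than counting.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """For every word, count which words follow it and how often."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def next_word_probs(model: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts into a probability for each candidate next word."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Pick the single most likely next word, or None if `word` was never seen."""
    probs = next_word_probs(model, word)
    return max(probs, key=probs.get) if probs else None


# Tiny corpus invented for this sketch.
corpus = (
    "the blue rooms need repainting "
    "the yellow rooms fade to white "
    "the yellow paint fades to white"
)
model = train_bigrams(corpus)
print(next_word_probs(model, "the"))  # {'blue': 0.3333333333333333, 'yellow': 0.6666666666666666}
print(predict_next(model, "the"))     # yellow
```

Asked about “the,” the toy picks “yellow” only because that pairing appeared twice in its data; it has no notion of what a room or paint is.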

To prove it, Hinton showed us a test he devised for ChatGPT4, the chatbot from a company called OpenAI. It was sort of reassuring to see a Turing Award winner mistype and blame the computer.

Geoffrey Hinton: Oh, damn this thing! We’re going to go back and start again.

Scott Pelley: That’s OK.

Hinton’s test was a riddle about house painting. An answer would demand reasoning and planning. This is what he typed into ChatGPT4.

Geoffrey Hinton: “The rooms in my house are painted white or blue or yellow. And yellow paint fades to white within a year. In two years’ time, I’d like all the rooms to be white. What should I do?”

The answer began in one second. GPT4 advised that “the rooms painted in blue” “need to be repainted.” “The rooms painted in yellow” “needn’t [be] repaint[ed]” because they would fade to white before the deadline. And…

Geoffrey Hinton: Oh! I didn’t even think of that!

It warned, “if you paint the yellow rooms white,” there’s a risk the color might be off when the yellow fades. Besides, it advised, “you’d be wasting resources” painting rooms that were going to fade to white anyway.

Scott Pelley: You believe that ChatGPT4 understands?

Geoffrey Hinton: I believe it definitely understands, yes.

Scott Pelley: And in five years’ time?

Geoffrey Hinton: I think in five years’ time it may well be able to reason better than us.

Reasoning that, he says, is leading to AI’s great risks and great benefits.

Geoffrey Hinton: So an obvious area where there’s huge benefits is health care. AI is already comparable with radiologists at understanding what’s going on in medical images. It’s gonna be very good at designing drugs. It already is designing drugs. So that’s an area where it’s almost entirely gonna do good. I like that area.


Scott Pelley: The risks are what?

Geoffrey Hinton: Well, the risks are having a whole class of people who are unemployed and not valued much because what they... what they used to do is now done by machines.

Other immediate risks he worries about include fake news, unintended bias in employment and policing, and autonomous battlefield robots.

Scott Pelley: What is a path forward that ensures safety?

Geoffrey Hinton: I don’t know. I... I can’t see a path that guarantees safety. We’re entering a period of great uncertainty where we’re dealing with things we’ve never dealt with before. And normally, the first time you deal with something totally novel, you get it wrong. And we can’t afford to get it wrong with these things.

Scott Pelley: Can’t afford to get it wrong, why?

Geoffrey Hinton: Well, because they might take over.

Scott Pelley: Take over from humanity?

Geoffrey Hinton: Yes. That’s a possibility.

Scott Pelley: Why would they want to?

Geoffrey Hinton: I’m not saying it will happen. If we could stop them ever wanting to, that would be great. But it’s not clear we can stop them ever wanting to.

Geoffrey Hinton told us he has no regrets because of AI’s potential for good. But he says now is the moment to run experiments to understand AI, for governments to impose regulations, and for a world treaty to ban the use of military robots. He reminded us of Robert Oppenheimer, who after inventing the atomic bomb campaigned against the hydrogen bomb: a man who changed the world and found the world beyond his control.

Geoffrey Hinton: It may be we look back and see this as a kind of turning point when humanity had to make the decision about whether to develop these things further and what to do to protect themselves if they did. I don’t know. I think my main message is there’s enormous uncertainty about what’s gonna happen next. These things do understand. And because they understand, we need to think hard about what’s going to happen next. And we just don’t know.

Produced by Aaron Weisz. Associate producer, Ian Flickinger. Broadcast associate, Michelle Karim. Edited by Robert Zimet.